SIGGRAPH 2010

Well, I didn’t get to make it to SIGGRAPH this year, but, as always, Ke-Sen Huang’s SIGGRAPH Paper Archive has us covered. Here are some papers that caught my eye:

The Frankencamera: An Experimental Platform for Computational Photography

Is there any realm of technology that moves faster than digital imaging? Consumer cameras are on a six-month product cycle, and the level of imaging we’re getting out of professional gear nowadays absolutely (and finally) blows away what we were able to do with film. And yet, there is so much more possible. Much of the intelligence inside consumer and professional cameras is locked away. While there are some efforts at exposing scriptability (see CHDK for Canon consumer/prosumer cams), the really low-level stuff remains just out of reach.

That’s changing. A group out of Stanford is creating the Frankencamera: a base for what’s being referred to as Computational Photography that is completely hackable, down to the microsecond scale. Put simply, CCDs are not film. They don’t require a shutter to cycle, their pixels do not need to be read simultaneously, and since images are fundamentally quite redundant (hence their compressibility), a lot can be done by merging the output of several frames and running extensive computations on the result. Some of the better things out of the computational photography realm:

- Flutter Shutter Photography: If you ever wanted “Enhance” a la CSI, this is how we’re going to get it.

- Light Field Photography With A Handheld Camera: What if you didn’t need to focus? What if you could capture all depths at once?

Getting these mechanisms out of the lab, and into your hands, is going to require a better platform. Well, it’s coming 🙂
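
To make the “merge several frames” idea concrete, here’s a toy sketch of my own (this is not the Frankencamera API): simulate a burst of noisy exposures of the same static scene, then average them. Everything in it is an assumption for illustration: the one-dimensional “scene”, the Gaussian noise model, and the helper names `noisy_frame`, `merge_frames`, and `rms_error`. Real pipelines also have to align the frames first, which this skips entirely.

```python
import random


def noisy_frame(scene, sigma, rng):
    """Simulate one exposure: the true scene plus Gaussian sensor noise."""
    return [pixel + rng.gauss(0.0, sigma) for pixel in scene]


def merge_frames(frames):
    """Average a burst of already-aligned frames, pixel by pixel."""
    count = len(frames)
    return [sum(values) / count for values in zip(*frames)]


def rms_error(frame, scene):
    """Root-mean-square difference between a frame and the true scene."""
    squared = sum((a - b) ** 2 for a, b in zip(frame, scene))
    return (squared / len(scene)) ** 0.5


# Hypothetical test scene: a flat list of 4096 "pixel" intensities.
rng = random.Random(2010)
scene = [float(i % 256) for i in range(4096)]

# Sixteen exposures of the same scene, each with independent noise.
burst = [noisy_frame(scene, 10.0, rng) for _ in range(16)]

single_error = rms_error(burst[0], scene)
merged_error = rms_error(merge_frames(burst), scene)
print(f"single frame error: {single_error:.2f}")
print(f"merged burst error: {merged_error:.2f}")
```

With 16 frames, the merged error should land at roughly a quarter of the single-frame error, since independent noise shrinks with about the square root of the number of frames averaged.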

Parametric Reshaping of Human Bodies in Images

Electrical Engineering has Alternating Current (AC) and Direct Current (DC). Computer Security has Code Review (human-driven analysis of software, seeking faults) and Fuzzing (machine-driven randomized bashing of software, also seeking faults). And Computer Graphics has Polygons (shapes with textures and lighting) and Images (flat collections of pixels, collected from real-world examples). To various degrees of fidelity, anything can be built with either approach, but the best things often happen at the intersections of each tech tree.

In graphics, a remarkable amount of interesting work is happening when real-world footage of humans is merged with an underlying polygonal awareness of what’s happening in three-dimensional space. Specifically, we’re getting the ability to accurately and trivially alter images of human bodies. In the video above, and in MovieReshape: Tracking and Reshaping of Humans in Videos from SIGGRAPH Asia 2010, complex body characteristics such as height and musculature are being programmatically altered with enough fidelity that the human visual system sees everything as normal.

That is actually somewhat surprising. After all, if there’s anything we’re supposed to be able to see small changes in, it’s people. I guess in the era of Benjamin Button (or, heck, normal people photoshopping their social networking photos) nothing should be surprising, but a slider for “taller” wasn’t expected.

Unstructured Video-Based Rendering: Interactive Exploration of Casually Captured Videos

In The Beginning, we thought it was all about capturing the data. So we did so, to the tune of petabytes.

Then we realized it was actually about exploring the data — condensing millions of data points down to something actionable.

Am I talking about what you’re thinking about? Yes. Because this precise pattern has shown up everywhere, as search, security, business, and science itself find themselves absolutely flooded with data, but with not nearly as much knowledge of what to do with all that data. We’re doing OK, but there’s room for improvement, especially for some of the richer data types, like video.

In this paper, the authors extend the sort of image correlation research we’ve seen in Photosynth to video streams, allowing multiple streams to be cross-referenced in time and space. It’s well known that, after the Oklahoma City bombing, federal agents combed through thousands of CCTV tapes, using the explosion as a sync pulse and tracking the one van that carried the attackers. One wonders to what degree that will become automatable, in the presence of this sort of code.
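
The “sync pulse” trick is easy to sketch. Assuming each camera has already been boiled down to one brightness number per frame (a big assumption, and not how the paper does its matching), you can slide one signal against the other and keep the lag where they agree best. The names `centered` and `best_offset`, the fake camera signals, and the `max_lag` search window are all made up for illustration.

```python
def centered(signal):
    """Subtract the mean so a constant background does not affect the score."""
    mean = sum(signal) / len(signal)
    return [value - mean for value in signal]


def best_offset(signal_a, signal_b, max_lag):
    """Return the lag (in frames) that best lines signal_b up with signal_a.

    A positive lag means a shared event shows up that many frames later
    in signal_b than in signal_a.
    """
    a = centered(signal_a)
    b = centered(signal_b)

    def score(lag):
        # Brute-force cross-correlation over the overlapping frames only.
        return sum(
            a[i] * b[i + lag]
            for i in range(len(a))
            if 0 <= i + lag < len(b)
        )

    return max(range(-max_lag, max_lag + 1), key=score)


# Two hypothetical cameras with unsynchronized clocks. Both see the same
# bright flash: frame 12 on camera A, frame 19 on camera B.
camera_a = [1.0] * 40
camera_b = [1.0] * 40
camera_a[12] = 50.0
camera_b[19] = 50.0

offset = best_offset(camera_a, camera_b, max_lag=15)
print(offset)  # 7: camera B's clock runs 7 frames behind camera A's
```

Once the offset is known, frame `i` on camera A corresponds to frame `i + offset` on camera B, which is the “cross-referenced in time” half of the problem. The “in space” half is much harder and is where the actual research is.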

Note: There’s actually a demo, and it’s 4GB of data!

Second note: Another fascinating piece of image correlation, relevant to both unstructured imagery and the unification of imagery and polygons, is here: Building Rome On A Cloudless Day.

In terms of “user interfaces that would make my life easier”, I must say, something that turns seeking from a “sequence of tiny freeze frames” (at best) or “just a flat bar I can click on” (at worst) into “a jigsaw puzzle of useful images” would be mighty nice.

(See also: Video Tapestries)

You know, some people ask me why I pay attention to pretty pictures, when I should be out trying to save the world or something.

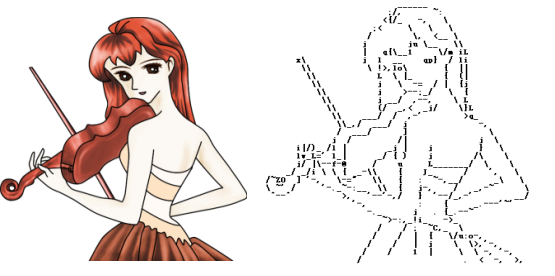

Well, let’s be honest. A given piece of code may or may not be secure. But the world’s most optimized algorithm for turning Anime into ASCII is without question totally awesome.
